Early Insights on Fine-Tuning GPT-3.5 from Mendable.ai

Fine-tuning insights with Mendable data

At Mendable.ai, we are committed to making search more intelligent, efficient, and context-aware for your business. It’s a constantly evolving landscape out there, with new tools and technologies continually shifting the game. That’s why we were particularly excited about OpenAI’s recent update that introduces fine-tuning capabilities for GPT-3.5.

The Buzz Around Fine-Tuning

For the uninitiated, fine-tuning lets you further train a pre-trained language model like GPT-3.5 on your own examples so it better suits a specific use case or context. The potential payoff is significant: better answer quality, stronger contextual understanding, and a more personalized experience for end users. However, as with any promising technology, the devil is in the details. Here are some early insights we gained from our experiments:

The Challenge of Gathering a Good Dataset

The cornerstone of effective fine-tuning is an appropriate training dataset. But gathering such a dataset isn’t always easy or straightforward. Platforms like Mendable.ai (yes, that’s us!) and LangSmith by LangChain let you capture user-rated completions. The value of this feature can’t be overstated: real-time, user-validated data can significantly enhance the performance of a fine-tuned model.

Of course, creating a dataset manually or synthetically is an option if you’re starting from scratch, but it tends to be time-consuming and is often less accurate than data rated by real users.
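To make this concrete, here is a minimal sketch of turning user-rated completions into training examples. It assumes each captured record is a dict with hypothetical `question`, `answer`, and `rating` fields (these names are ours for illustration, not a Mendable or LangSmith export format), and it writes the chat-style JSONL layout that OpenAI documents for GPT-3.5 fine-tuning, with one `messages` list per line.

```python
import json


def to_training_examples(records, system_prompt, min_rating=1):
    """Keep only well-rated records and convert them to chat-format examples.

    The record keys ("question", "answer", "rating") are assumptions made
    for this sketch; adapt them to however your ratings are stored.
    """
    examples = []
    for record in records:
        if record["rating"] < min_rating:
            continue  # skip completions that users rated poorly
        examples.append(
            {
                "messages": [
                    {"role": "system", "content": system_prompt},
                    {"role": "user", "content": record["question"]},
                    {"role": "assistant", "content": record["answer"]},
                ]
            }
        )
    return examples


def to_jsonl(examples):
    """Serialize examples as JSONL: one JSON object per line."""
    return "\n".join(json.dumps(example) for example in examples)
```

Filtering on the rating before conversion is the whole point of capturing user feedback: only completions that users approved of end up as targets for the model to imitate.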

Transforming Training Data Matters

Once you’ve amassed a decent dataset, your next mission is to transform it strategically. You might wonder why this is important. Well, specific transformations can lead to marked improvements in performance. For example, you can remove instructions that are repeated in every prompt while keeping the same desired completion. The model then learns the behavior from the examples themselves, so you no longer need to restate those instructions on every request, saving you valuable tokens in the process.

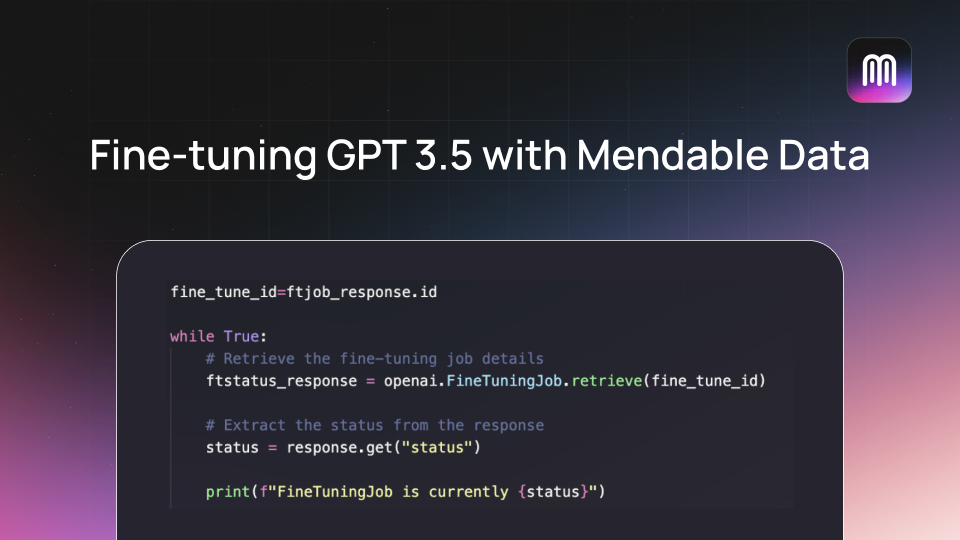
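One way to picture the instruction-stripping transformation is the sketch below. It assumes the repeated instructions appear verbatim as a known block of text inside the system or user messages; the helper name `strip_instructions` and that exact-match assumption are ours, and real prompts may need fuzzier matching. The assistant completion is left untouched.

```python
def strip_instructions(example, instruction_block):
    """Remove a repeated instruction block from the prompt side of an example.

    Only system and user messages are edited. The assistant message (the
    desired completion) is kept exactly as it was.
    """
    messages = []
    for message in example["messages"]:
        if message["role"] != "assistant":
            content = message["content"].replace(instruction_block, "").strip()
            if not content:
                continue  # the message held nothing but the instructions
            message = {**message, "content": content}
        messages.append(message)
    return {"messages": messages}
```

Applied to every example before training, this yields shorter prompts paired with the same completions, which is where the token savings come from.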

OpenAI Makes It Easy

After these preliminary steps, the fine-tuning process itself is surprisingly straightforward, thanks to OpenAI’s intuitive API. A shoutout to the OpenAI team for their stellar work. We managed to get fine-tuning up and running in under an hour, which speaks volumes about the API’s usability.
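For readers who want to see roughly what that looks like, here is a minimal sketch, not our exact setup. It assumes the 1.x `openai` Python package, whose client exposes `files.create` and `fine_tuning.jobs.create`; the helper name `start_fine_tune` and the file name in the usage comment are hypothetical.

```python
def start_fine_tune(client, training_path, model="gpt-3.5-turbo"):
    """Upload a JSONL training file and start a fine-tuning job.

    `client` is expected to be an `openai.OpenAI()` instance (an assumption
    of this sketch). Returns the ID of the created job.
    """
    with open(training_path, "rb") as training_file:
        uploaded = client.files.create(file=training_file, purpose="fine-tune")
    job = client.fine_tuning.jobs.create(training_file=uploaded.id, model=model)
    return job.id


# Usage (requires the `openai` package and an OPENAI_API_KEY in the environment):
#   from openai import OpenAI
#   job_id = start_fine_tune(OpenAI(), "training.jsonl")
#   status = OpenAI().fine_tuning.jobs.retrieve(job_id).status
```

Passing the client in as an argument keeps the helper easy to try out with a stub before spending money on a real training run.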

A Peek Into the Future

While we’re talking about fine-tuning, it’s hard not to consider the future possibilities this feature holds. Could we see an integrated fine-tuning option right within Mendable.ai’s platform for OpenAI and even open-source models? Well, we don’t want to give too much away, but let’s just say it’s an exciting avenue we’re exploring.