Everything you need to know about Mendable: Build and deploy AI Chat Search

A complete guide to setting up a successful AI chat bot

Introduction to Mendable

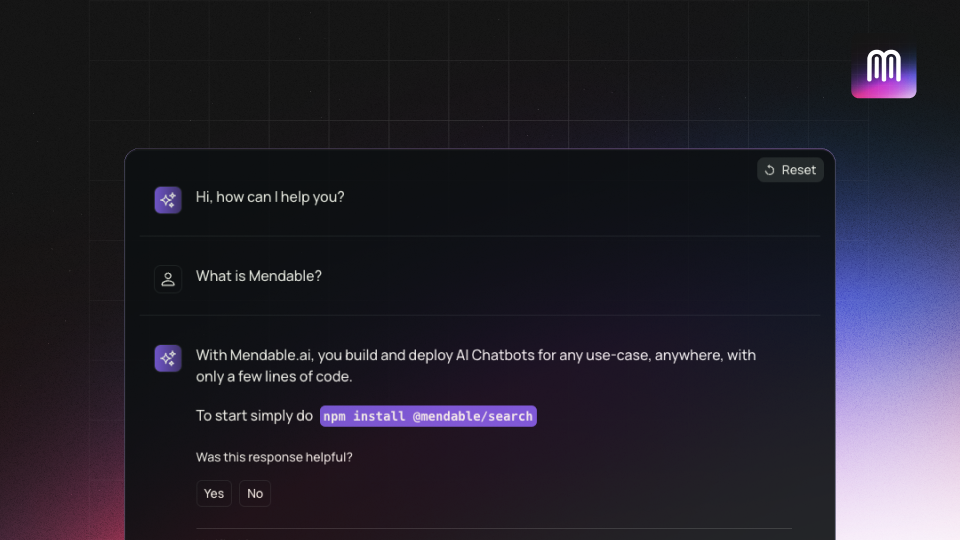

Mendable is a platform that provides chat-powered search functionality to businesses. It’s designed with a developer-centric approach, offering a series of powerful and highly customizable components. Another important aspect of Mendable’s design is its data agnosticism. This means that Mendable can work with many types of data, regardless of its structure or format. You can ingest data through a simple online GUI or through Mendable’s API, and you can easily add, modify, or delete different types of sources. This makes it easy to get Mendable up and running with your existing data sources.

At the heart of Mendable is a robust API that integrates LLM capabilities into your applications. This easy-to-use interface connects your data sources with the chat interface, allowing you to deploy complex queries, retrieve comprehensive answers, and create a user experience that’s truly intuitive and engaging.

Mendable also comes with an interactive user interface that allows for team collaboration, easy ingestion of data, customization of models, and assembly of components, all with a user-friendly and intuitive design.

How Can I Make the Most out of Mendable?

Mendable is designed to be modular, so you can build your own custom chatbot solution in a fraction of the time. This modularity and data agnosticism make it easy to get up and running, and they let you customize your projects to fit the needs of individual teams. To make the most of Mendable, we suggest reading our resources at mendable.ai/blog as well as our docs. You can also contact the founders whenever you’d like at eric@mendable.ai!

Setting up the Environment

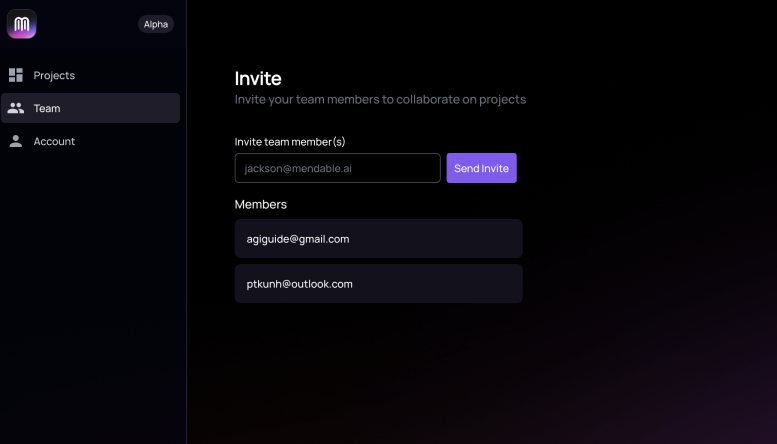

Invite Your Team

If you are part of a team, you can invite your team members! To do this, go to the team section of the dashboard and enter the emails of the members you’d like to have as a part of your project.

Ingesting Your Materials

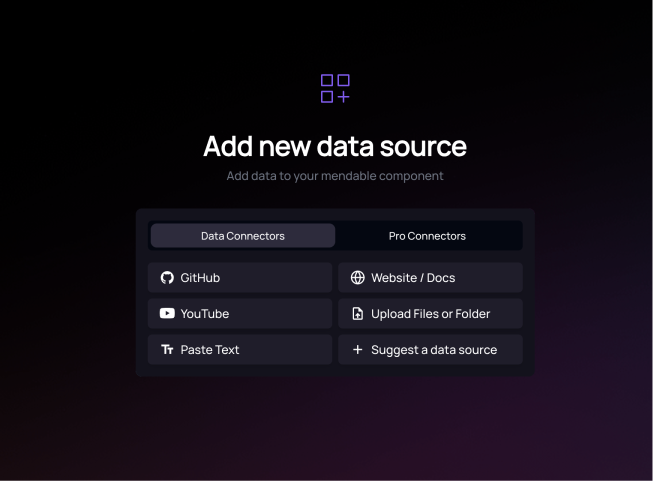

Mendable offers a variety of data connectors that you can use to ingest your data. These data connectors are:

- Website Crawler

- Documentation Websites

- GitHub

- YouTube

- Sitemap

- OpenAPI Spec

- Files (markdown, txt, and pdf)

- Pasting Text

We also offer a few OAuth connectors:

- Confluence

- Google Drive

- Notion

- Zendesk

If you are looking for one that is not listed, we are happy to add it for you! Please reach out to us.

Ingest via the API

If you’d like to ingest via the API, you can do so by going to our docs.

Reingestion

As your data changes, the bot needs to stay up to date as well. For this, we can set up automatic reingestion. We are currently working on adding this as a self-serve option, but for now please reach out to eric@mendable.ai to get it set up. If there are any data connectors that aren’t available, or ones that you are having issues with, feel free to reach out to us!

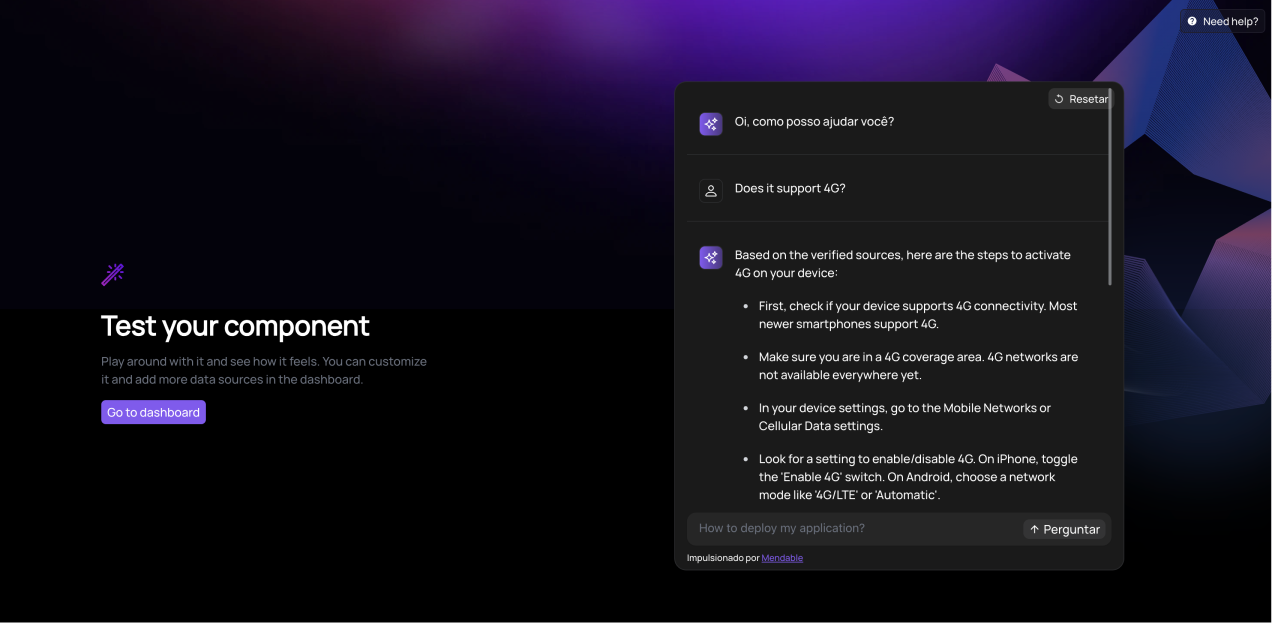

Your First Test

So you’ve signed up, invited your team, and ingested the relevant data. Now it’s time to make your first test! In terms of best practices, we suggest a few things:

- Start out by trying a few questions

- Choose your model

By default, we offer three different models: GPT4, Claude-2, and GPT3.5. Overall, GPT4 has the best performance and has the largest context window. This is especially relevant if you have a large number of documents in your project.

GPT3.5 is the fastest model (the answers generate quicker than GPT4). However, it loses context, so it is best used with minimal documents.

Claude-2 is a great alternative to GPT4. It doesn’t perform as well, but is better than GPT3.5 and is also cheaper. Additionally, it is a very good model for making summaries and lists.

If you’d like, you can use the duplicate project option in the project settings of the dashboard to create three identical projects, and then test the same set of questions against all three models.

- Come up with an evaluation set

From our experience, teams have the most success when they come up with a list of questions (around 20). These should be real questions you get from your users, or ones that are designed to confirm the ingestion process was successful. As you test, you can rate each response as good or bad. This will allow you to take actions such as teach model, as well as evaluate the data ingested. Feel free to reach out to us if you have any questions on evaluation!
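One lightweight way to keep track of an evaluation set across models is to log each rating and tally the results. The sketch below is not part of Mendable. The model labels, sample questions, and function names (`summarize_ratings`, `questions_to_review`) are hypothetical, and the ratings are assumed to be copied by hand from your own tests in the dashboard.

```python
from collections import defaultdict

# Hypothetical evaluation log: one (model, question, rating) tuple per test.
# Ratings mirror the good/bad ratings you give answers while testing.
RATINGS = [
    ("GPT4", "How do I authenticate?", "good"),
    ("GPT4", "What are the rate limits?", "bad"),
    ("GPT3.5", "How do I authenticate?", "good"),
    ("GPT3.5", "What are the rate limits?", "bad"),
    ("Claude-2", "How do I authenticate?", "good"),
    ("Claude-2", "What are the rate limits?", "good"),
]


def summarize_ratings(ratings):
    """Return {model: fraction of answers rated 'good'}."""
    totals = defaultdict(int)
    good = defaultdict(int)
    for model, _question, rating in ratings:
        if rating not in ("good", "bad"):
            raise ValueError(f"unknown rating: {rating!r}")
        totals[model] += 1
        if rating == "good":
            good[model] += 1
    return {model: good[model] / totals[model] for model in totals}


def questions_to_review(ratings):
    """Return questions rated 'bad' by at least one model, in first-seen order."""
    flagged = []
    for _model, question, rating in ratings:
        if rating == "bad" and question not in flagged:
            flagged.append(question)
    return flagged


if __name__ == "__main__":
    for model, share in summarize_ratings(RATINGS).items():
        print(f"{model}: {share:.0%} rated good")
    print("Review:", questions_to_review(RATINGS))
```

Questions that show up in the review list are natural candidates for checking the ingested sources or for using teach model.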

Troubleshooting

Sometimes you don’t get exactly the answers you are looking for. There could be a few reasons why this is the case.

- The bot is saying it couldn’t find information on the topic you asked about

This is likely due to either an ingestion issue or a non-relevant query. For example, if you add your API docs and ask, “Who is the president of the United States?”, Mendable won’t pick up any sources that relate to that query. If the question is relevant but your API docs weren’t ingested correctly, the problem lies in the ingestion process. By looking at the chunks of the data sources that were retrieved, you should be able to identify this issue.

- Hallucinations

If the answer seems “sort of” correct, or parts of it are made up, this is likely due to hallucinations. This occurs more often if you have your model creativity set to normal. Check the sources that were retrieved with the answer and make sure the answer is present in them. If not, it could have been an ingestion issue. If the chunks are present, then using teach model to tailor the answer would be the best solution.

- The answer is mostly correct, but could use some additional detail

Sometimes you get an answer you like, but an extra bit of context would make it perfect. In this case, you can use the teach model feature to add that context.

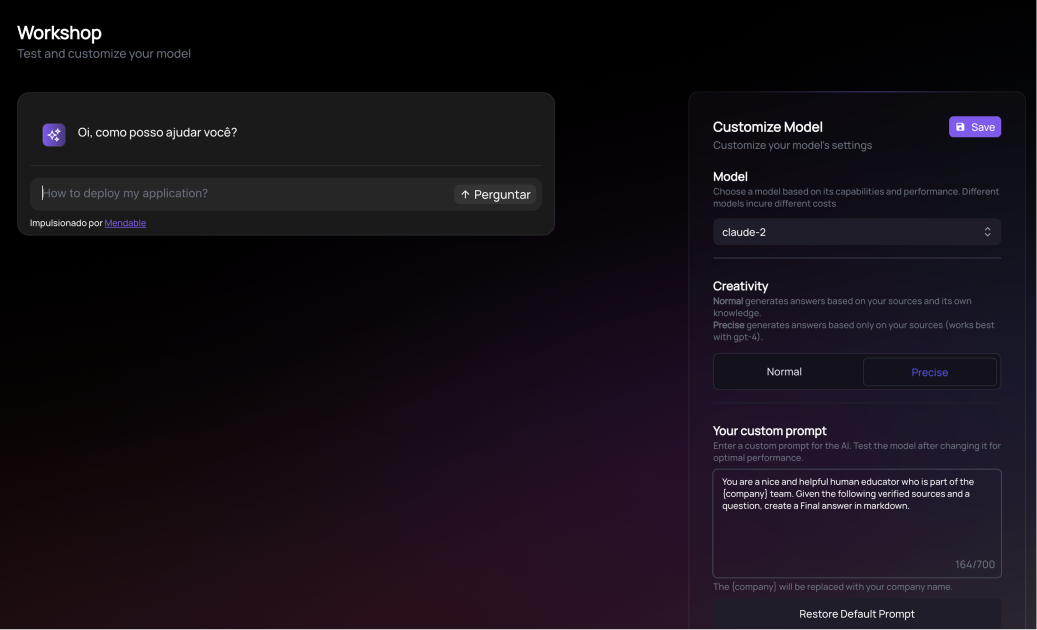

Customizing the Model

You can customize the model in Mendable in several ways to fit your specific needs. These customizations can be found in the workshop section of the dashboard. Some of these include:

Model Choice:

We offer GPT3.5, Claude-2, and GPT4 out of the box. Additionally, bring your own key functionality is available for enterprise plans.

Creativity:

The two options for model creativity are precise and normal.

Precise and normal modes differ in one way. Normal uses the underlying training data of the LLM to help answer questions, while precise only uses the ingested data sources to answer. If your use case involves “creativity”, such as assistance with code generation, then normal is the preferred option. If your use case specifically requires accurate and focused answers, then precise is the preferred option.

Custom Prompt:

The custom prompt allows you to control the voice of your model as well as the structure. Additionally, you can use it to do hallucination gatekeeping. Feel free to read more on our blog post about the custom prompt: https://www.mendable.ai/blog/customprompt

Teach Model

Teach model is a powerful feature that enables you to “take control” over what Mendable outputs. There are three use cases in which teach model tends to be the most powerful.

- You want to add extra detail in the answer

- You want to ‘edit’ an incorrect answer

- You want to provide additional information to your customers that doesn’t necessarily “fit” anywhere in the documentation.

To teach the model, go to the conversations section of the dashboard and click on an individual message, or go to the teach model section. The teach model section is divided into three sections: poorly rated, well rated, and taught questions. This makes it easy to go into the poorly rated questions, evaluate why they were not helpful, and quickly edit the answer if necessary. Once an answer is edited, it will be prioritized if the exact question (or a semantically similar one) is asked in the future. Additionally, you can connect specific documentation sources to the answer if you want to link your customers somewhere to read more.

Exporting Conversation Data

Mendable also allows conversation data to be exported. As of right now, this is a feature that is reserved for enterprise contracts. If this is a necessity, please reach out to the Mendable team at help@mendable.ai!

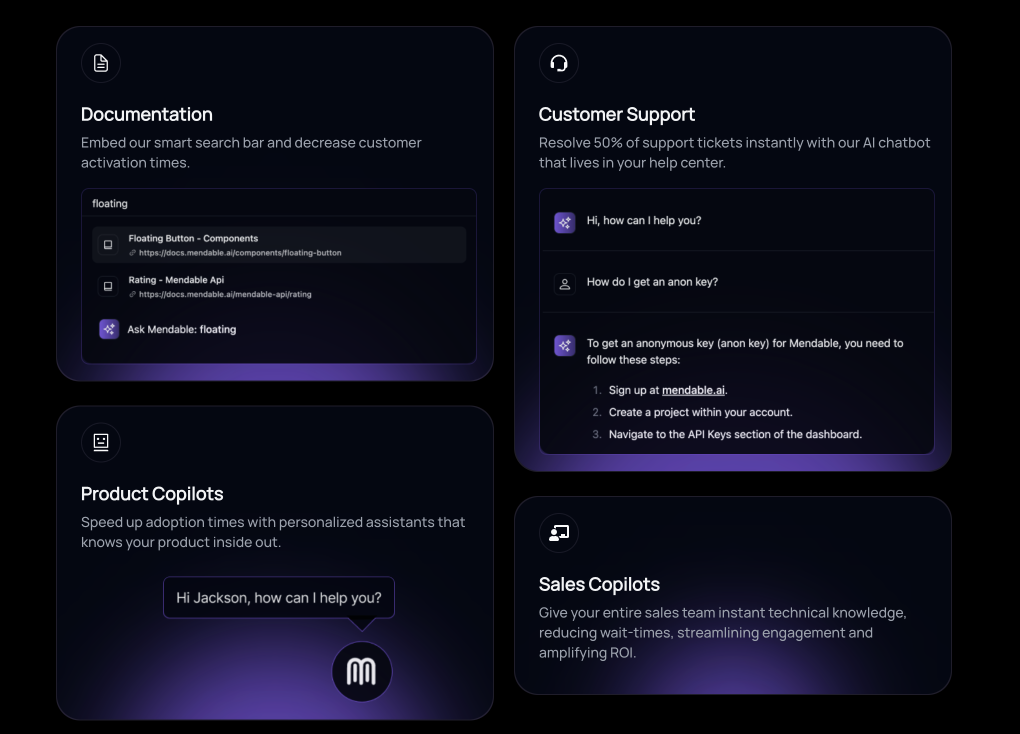

Deployment

Now you’ve uploaded your content, tested, taught the questions that you needed to, and you’re ready to deploy to production! Mendable offers a variety of different ways to implement your AI Chat Search.

Components

Mendable offers a variety of pre-built components in React as well as vanilla JS, making it easy to embed almost anywhere. Additionally, if you prefer to build your own custom front end, you can use our API.

White Labeling

Mendable also offers white labeling for the enterprise plan. If white labeling is a requirement, please reach out to help@mendable.ai to get this set up.

Integrations

As well as embedding our component, we also offer a pre-built Discord bot and Slack bot to easily integrate Mendable into your communities and teams.

Protection and Security

In the project settings section of the dashboard, Mendable offers a couple of protections to keep your bot secure. Two things you can do are whitelisting domains and rate limiting by IP address.
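To make these two protections concrete, here is a minimal sketch of what a domain whitelist check and a per-IP rate limit look like in general. It is an illustration of the concepts only, not Mendable's implementation. The domain, the limits, and the names `origin_allowed` and `SlidingWindowLimiter` are all assumptions made for the example.

```python
import time
from collections import defaultdict, deque
from urllib.parse import urlparse

# Hypothetical whitelist: only requests coming from these hosts are served.
ALLOWED_DOMAINS = {"docs.example.com"}


def origin_allowed(origin, allowed=ALLOWED_DOMAINS):
    """Return True if the origin URL's hostname is on the whitelist."""
    host = urlparse(origin).hostname
    return host is not None and host in allowed


class SlidingWindowLimiter:
    """Allow at most max_requests per IP within a sliding time window."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)  # ip -> timestamps of accepted requests

    def allow(self, ip, now=None):
        now = time.monotonic() if now is None else now
        hits = self._hits[ip]
        # Drop timestamps that have fallen out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False
        hits.append(now)
        return True


if __name__ == "__main__":
    limiter = SlidingWindowLimiter(max_requests=2, window_seconds=60)
    print(origin_allowed("https://docs.example.com/guide"))
    print([limiter.allow("203.0.113.7") for _ in range(3)])
```

The whitelist stops other sites from embedding your bot, while the limiter caps how many questions a single IP address can send in a given window.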

Additional Questions and Roadmap Items

If you have any additional questions, or would like to learn more about what Mendable has planned for the future, please reach out to us! You can speak to the founder at help@mendable.ai!